PYNQ-DPU on CorazonAI

Introduction

This article shows how to create a PYNQ-DPU application on CorazonAI that accelerates the whole AI pipeline for video analytics. It is a follow-up to the previous article on CorazonAI, which can be found here: https://www.makarenalabs.com/pynq-on-corazon-ai-ai-applications-for-all/

AI pipeline and PYNQ

PYNQ is a framework which allows us to use Python bindings on the FPGA.

However, having Python bindings does not only mean that we can work very easily with our custom IP cores.

In fact, Python is a gold-standard programming language in computer science, and one of its main uses is AI.

Python offers a set of APIs for BLAS, DSP and numerical applications thanks to its NumPy library and the OpenCV bindings.

Those libraries are used to pre-process and post-process the data that the AI model consumes, from classical graph-based AI algorithms to modern, complex Deep Neural Networks.
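To make the pre-processing idea concrete, here is a minimal sketch of a typical pre-process step written with NumPy only. The function name `preprocess`, the nearest-neighbour resize and the [0, 1] scaling are our assumptions for illustration; a real model may expect a different interpolation, channel order or normalization.

```python
import numpy as np

def preprocess(frame_bgr, out_h, out_w):
    """Resize (nearest neighbour), convert BGR -> RGB and scale to [0, 1]."""
    in_h, in_w = frame_bgr.shape[:2]
    # Source row/column index for every destination pixel.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    resized = frame_bgr[rows[:, None], cols[None, :]]
    # Reverse the channel axis: BGR (OpenCV order) -> RGB.
    rgb = resized[..., ::-1]
    return rgb.astype(np.float32) / 255.0

# Tiny synthetic frame: pure blue in BGR order.
frame = np.zeros((4, 4, 3), dtype=np.uint8)
frame[..., 0] = 255
out = preprocess(frame, 2, 2)
```

On a CPU this kind of function is cheap for small frames but can become a bottleneck at video resolutions, which is why it is a candidate for hardware acceleration.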

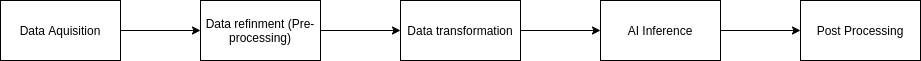

In conclusion for this introduction we post a typical working pipeline for AI:

It is important to note that the pipeline does NOT include only the inference. Companies sometimes declare impressive performance figures, but very rarely do we see the performance of the WHOLE pipeline.
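One simple way to check this on your own application is to time every stage, not just the inference. The sketch below uses only the Python standard library; the stage names and the stand-in functions are hypothetical placeholders, to be replaced with the real pre-process, inference and post-process calls.

```python
import time

def run_pipeline(frame, stages):
    """Run each (name, function) stage in order and record its wall-clock time."""
    timings = {}
    data = frame
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        timings[name] = time.perf_counter() - start
    return data, timings

# Hypothetical stand-in stages, only to make the sketch runnable.
stages = [
    ("pre-process", lambda x: [v / 255.0 for v in x]),
    ("inference", lambda x: sum(x)),
    ("post-process", lambda s: round(s, 3)),
]

result, timings = run_pipeline([0, 128, 255], stages)
total = sum(timings.values())
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1e6:.1f} us")
print(f"whole pipeline: {total * 1e6:.1f} us")
```

Comparing the "inference" entry with the total gives the share of time spent outside the model itself.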

So, moving forward, is it possible to accelerate every stage of the complete pipeline for a video analytics application? The answer is yes, and the board we should use is CorazonAI. How do we build a complete pipeline for CorazonAI? We now detail every step.

Creation of a custom platform

First, we need to identify any heavy pre-processing functions, such as resizing, color conversion and high-pass (or low-pass) filtering. For those functions we can create an IP core with Vitis HLS. This tool allows the user to synthesize C++ code to RTL without any previous knowledge of digital hardware design. Here is the link to the user guide page:

https://www.xilinx.com/html_docs/xilinx2021_1/vitis_doc/introductionvitishls.html

Remember, when selecting the part, that CorazonAI is a ZU5 device with a -1 speed grade.

Before moving on and creating the design, we must keep our final goal in mind: to create a platform that integrates the IPs needed to accelerate all the pipeline stages. For AI inference, Xilinx provides engineers with an IP called DPU (Deep Learning Processor Unit).

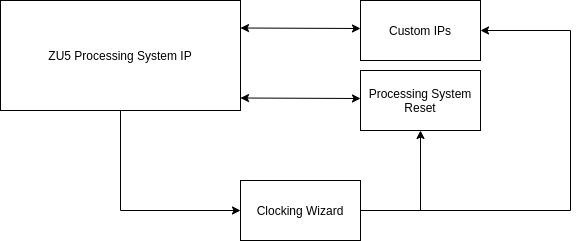

Second, we need to integrate all the IPs into a platform. Recall that after the first step we have a set of IPs, which are the accelerated functions. We now proceed to create the platform, which integrates all the IPs except for the DPU. To do that we must use Vivado IPI (IP Integrator). The steps are well detailed here: https://github.com/Xilinx/Vitis-Tutorials/blob/2021.1/Vitis_Platform_Creation/Introduction/02-Edge-AI-ZCU104/step1.md

The link shows how to create a Vivado design for a valid platform. Remember that our platform is a ZU5 (base architecture of CorazonAI). At the end we should have a schematic like this:

Finally we have a complete hardware stack which can be used as a hardware reference platform.

Platform Software Stack

It is now time to create a software stack on top of this hardware platform. To do so, we follow the next step: https://github.com/Xilinx/Vitis-Tutorials/blob/2021.1/Vitis_Platform_Creation/Introduction/02-Edge-AI-ZCU104/step2.md

To create this software stack we need to build a minimal PetaLinux image, which builds the packages for the specific architecture. For this step we need the PetaLinux tools offered by Xilinx; here is the link to the download page: https://www.xilinx.com/support/download/index.html/content/xilinx/en/downloadNav/embedded-design-tools.html

To conclude this section, before moving on to integrate the DPU into the platform, we need to build an actual Vitis platform. To do so we need the Vitis stack installed on our PC; here is the link: https://www.xilinx.com/support/download/index.html/content/xilinx/en/downloadNav/vitis.html

The steps to follow to obtain a final working platform are at this link: https://github.com/Xilinx/Vitis-Tutorials/blob/2021.1/Vitis_Platform_Creation/Introduction/02-Edge-AI-ZCU104/step3.md

Deploy on target board

We have now succeeded in creating our base platform. This platform can be used in any Vitis project, not only this one.

To link the DPU and create a fully accelerated AI pipeline, the PYNQ team (I love you guys <3) comes to our aid.

First, at this link: https://github.com/Xilinx/DPU-PYNQ you can find a repo with the instructions and ready-made Makefiles to link the DPU against our platform.

Second, to replicate the flow we need to go to the “boards” folder (here is the link: https://github.com/Xilinx/DPU-PYNQ/tree/master/boards) and follow the instructions exactly as they are written to obtain a working design. This design is portable and also works with other flows (such as XRT or OpenCL), not just PYNQ!

Finally, we need to install the PYNQ dependencies on our board (here is the link: https://github.com/Xilinx/DPU-PYNQ) and try the Jupyter notebooks!
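For orientation, the notebooks in the DPU-PYNQ repo follow roughly the pattern sketched below. This is a minimal sketch, not the definitive flow: the file names `dpu.bit` and `model.xmodel` are placeholders, the helper names `open_dpu` and `run_inference` are ours, and the sketch assumes a model with a single float32 input and output tensor. Check the notebooks for the exact data types and shapes of your model.

```python
import numpy as np

def open_dpu(bitstream="dpu.bit", model="model.xmodel"):
    """Load the DPU overlay and a compiled model; this runs only on the board."""
    # Imported here because pynq_dpu exists only after installing DPU-PYNQ.
    from pynq_dpu import DpuOverlay
    overlay = DpuOverlay(bitstream)   # placeholder bitstream name
    overlay.load_model(model)         # placeholder model name
    return overlay.runner

def run_inference(runner, image):
    """Run one inference on a pre-processed image with the DPU runner."""
    in_shape = tuple(runner.get_input_tensors()[0].dims)
    out_shape = tuple(runner.get_output_tensors()[0].dims)
    # Assumption: float32 buffers; some models use a different data type.
    input_data = [np.empty(in_shape, dtype=np.float32, order="C")]
    output_data = [np.empty(out_shape, dtype=np.float32, order="C")]
    input_data[0][0, ...] = image     # fill batch slot 0
    job_id = runner.execute_async(input_data, output_data)
    runner.wait(job_id)
    return output_data[0][0]
```

On the board, usage would look like `runner = open_dpu()` followed by `scores = run_inference(runner, preprocessed_frame)`, where `preprocessed_frame` already has the shape the model expects.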

We will soon release (open source) the image for CorazonAI.

Thank you for reading the article.

Enjoy your acceleration,

Guglielmo Zanni

Principal Scientist @MakarenaLabs S.R.L.
